I need to work on a data privacy project, so I am spending some time reviewing notes I wrote a couple of years ago. I figure it would be a great exercise to reorganize them into a series of posts, and this is the first post of the series. 🙂

Introduction to data privacy

From the point of view of data privacy, we can categorize the attributes of a data set into three groups: key attributes, sensitive attributes, and quasi-identifiers. Let’s use medical data as an example. Key attributes include the patient’s name and social security number. Sensitive attributes can be the diseases the patient has. Quasi-identifiers can be zip code, salary range, and so on. To protect the patients’ privacy, simply removing the key attributes from the database is not sufficient, because a malicious user may take advantage of other prior knowledge to uniquely identify the owner of a record and/or infer sensitive information from the quasi-identifiers.

For example, consider the extremely small data set shown below. Assume that it is a patient data set from a very small community. In the table, ID and name are the key attributes, age and gender are the quasi-identifiers, and whether the patient has AIDS is the sensitive attribute.

| ID | Name | Age | Gender | AIDS? |
|------|--------|-----|--------|-------|
| 0001 | Janice | 39 | F | No |
| 0002 | Sam | 44 | M | No |
| 0003 | Paul | 28 | M | Yes |
| 0004 | Alice | 25 | F | Yes |
| 0005 | Jody | 30 | F | No |

If we are going to disclose the data set for research purposes, of course we should remove the key attributes (ID and name) from the data set. But that is not sufficient. Anyone who knows that Alice is a woman in her 20s and is in the data set can infer from the last three columns alone that she almost certainly has AIDS, since only one record matches that description.
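The inference above can be sketched in a few lines of Python. This is only an illustration: the `released` list is the example table with ID and name removed, and the attacker's prior knowledge (gender, age range, and that Alice is in the data set) is an assumption made for the sketch.

```python
# Released table from the example, with the key attributes (ID, Name) removed.
released = [
    {"age": 39, "gender": "F", "aids": "No"},
    {"age": 44, "gender": "M", "aids": "No"},
    {"age": 28, "gender": "M", "aids": "Yes"},
    {"age": 25, "gender": "F", "aids": "Yes"},
    {"age": 30, "gender": "F", "aids": "No"},
]


def matching_records(table, gender, min_age, max_age):
    """Return the released records consistent with the attacker's prior knowledge."""
    return [
        row
        for row in table
        if row["gender"] == gender and min_age <= row["age"] <= max_age
    ]


# Assumed prior knowledge: Alice is a woman in her 20s and is in the data set.
candidates = matching_records(released, gender="F", min_age=20, max_age=29)
print(len(candidates), [row["aids"] for row in candidates])  # 1 ['Yes']
```

Because only one record survives the filter, the quasi-identifiers alone pin down the sensitive value.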

Therefore, to ensure the privacy of the records’ owners, we cannot always return a truthful answer to every query. All privacy-ensuring techniques essentially end up introducing distortion into the query answers. Roughly speaking, we can categorize the privacy-ensuring techniques into two classes: pre-query randomization and post-query randomization. For pre-query randomization, we essentially construct a “fake” database to serve as a surrogate in place of the original one. This can be achieved by adding fake records, deleting true records, distorting records, suppressing some attributes, and generalizing some attributes of records. Many privacy methodologies such as $k$-anonymization and $l$-diversity are proposed along this line of thought. For example, in terms of (entropy) $l$-diversity, the conditional entropy of a sensitive attribute $S$ given any value $q$ of the quasi-identifier $Q$, $H(S \mid Q = q)$, is at least $\log_2 l$ bits. Note that the conditional entropy $H(S \mid Q = q)$ here essentially measures the randomness of the sensitive attribute $S$ given $Q = q$ in terms of number of bits. Therefore, the larger $H(S \mid Q = q)$ is, the harder it is to predict $S$ from $Q$. The advantage of this class of methods is that we can allow users to utilize the data set directly and non-interactively. However, as users are going to use the surrogate data set directly for their studies, the surrogate data set should have all of its statistics close to those of the original one. The latter, of course, is very hard to guarantee.
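To make the entropy condition concrete, here is a minimal sketch that computes the entropy of the sensitive attribute inside each quasi-identifier group and compares it with $\log_2 l$. The generalized table (age ranges, gender suppressed) and the function names are hypothetical, and the check uses "at least $\log_2 l$ bits" as the threshold.

```python
import math
from collections import Counter, defaultdict


def group_entropies(records):
    """Entropy (in bits) of the sensitive value within each quasi-identifier group."""
    groups = defaultdict(list)
    for quasi_id, sensitive in records:
        groups[quasi_id].append(sensitive)
    entropies = {}
    for quasi_id, values in groups.items():
        n = len(values)
        # sum of p * log2(1/p) over the distinct sensitive values in the group
        entropies[quasi_id] = sum(
            (c / n) * math.log2(n / c) for c in Counter(values).values()
        )
    return entropies


def satisfies_entropy_l_diversity(records, l):
    """True if every group has entropy of at least log2(l) bits."""
    return all(h >= math.log2(l) for h in group_entropies(records).values())


# Hypothetical generalization of the example table: (age range, AIDS?).
generalized = [
    ("35-49", "No"),   # Janice
    ("35-49", "No"),   # Sam
    ("20-34", "Yes"),  # Paul
    ("20-34", "Yes"),  # Alice
    ("20-34", "No"),   # Jody
]

print(group_entropies(generalized))
print(satisfies_entropy_l_diversity(generalized, 2))  # False
```

The "35-49" group contains a single sensitive value, so its entropy is zero and the table fails the check for $l = 2$: anyone known to be in that group is known not to have AIDS.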

If we restrict the queries that users can make, then rather than distorting the data set itself, we may distort the query results afterward. The post-query randomization approach is suitable for data sets that only allow interactive access. An example of this approach is the Laplace mechanism for differential privacy. Even though differential privacy is possible in the non-interactive setting as well, we will restrict ourselves to the interactive setting in this post. In other words, we assume that users cannot directly access the data set but can only make queries about it.
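As a rough illustration of post-query randomization, the sketch below answers a counting query and then perturbs the answer with Laplace noise. It is a sketch under stated assumptions, not a full treatment of the Laplace mechanism: the function name `noisy_count`, the toy records, and the choice of noise scale `1 / epsilon` (the usual choice for a count, which changes by at most one when a record is added or deleted) are illustrative.

```python
import random


def noisy_count(records, predicate, epsilon, rng=random):
    """Return the true count plus Laplace noise with scale 1/epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    # The difference of two independent Exponential(rate=epsilon) draws
    # follows a Laplace distribution with mean 0 and scale 1/epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


# Toy records (illustrative only); the user sees the noisy answer, never the rows.
records = [{"aids": "No"}, {"aids": "No"}, {"aids": "Yes"}, {"aids": "Yes"}, {"aids": "No"}]
print(noisy_count(records, lambda r: r["aids"] == "Yes", epsilon=0.5))
```

A smaller `epsilon` gives a larger noise scale, so the answer is more private but less accurate.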

Differential Privacy

Differential privacy is based on the premise that the query result of a “private” data set should not change drastically with the addition or deletion of a single record. Otherwise, there is a chance for malicious users to infer the identity or sensitive information of a private record. Note that differential privacy is a rather strong condition since it makes no assumption about the methods a malicious user could use to extract that information. Differential privacy does not try to limit what records can or cannot exist in a data set. Actually, the definition is blind to (independent of) the actual data inside the data set. To ensure that the statistics of query results do not change drastically when a record is added or deleted, the query outcome is typically distorted by the addition of noise. As a result, the query outcome $f(D)$, even for a fixed data set $D$, should be considered a random variable.

Therefore, if $f$ is private, for any two data sets $D_1$ and $D_2$ where one data set is equal to the other one with an additional record, we want

$$\Pr\big(f(D_1) \in R\big) \approx \Pr\big(f(D_2) \in R\big)$$

for every subset $R$ of the range of $f$. The above formulation can be more precisely characterized with the following definition.

Definition: $\epsilon$-Differential Privacy

The query $f$ is $\epsilon$-differentially private if and only if for any subset $R$ of the range of $f$,

$$\Pr\big(f(D_1) \in R\big) \le e^{\epsilon} \Pr\big(f(D_2) \in R\big) \tag{1}$$

where $D_1$ and $D_2$ are any two data sets that differ by at most one record and where one data set is a subset of the other.

Since (1) has to hold for all “adjacent” data sets $D_1$ and $D_2$ (including when $D_1$ and $D_2$ are swapped), (1) can only be satisfied when $\epsilon \ge 0$, or equivalently $e^{\epsilon} \ge 1$.
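For a query with finitely many possible outputs, condition (1) can be checked numerically. The sketch below is a minimal illustration: the two output distributions are made-up numbers standing in for the query outcome on two adjacent data sets, and `smallest_epsilon` is a hypothetical helper name. For discrete outputs it is enough to check single outcomes, because a bound that holds for every outcome also holds for any sum of outcomes, that is, for any subset.

```python
import math


def smallest_epsilon(p1, p2):
    """Smallest eps with p1[r] <= e^eps * p2[r] and p2[r] <= e^eps * p1[r] for all r."""
    eps = 0.0
    for r in set(p1) | set(p2):
        a, b = p1.get(r, 0.0), p2.get(r, 0.0)
        if a == 0.0 and b == 0.0:
            continue
        if a == 0.0 or b == 0.0:
            # An outcome possible on one data set but impossible on the other
            # cannot be covered by any finite epsilon.
            return math.inf
        eps = max(eps, abs(math.log(a / b)))
    return eps


# Made-up output distributions of a randomized query on two adjacent data sets.
out_d1 = {"yes": 0.75, "no": 0.25}
out_d2 = {"yes": 0.25, "no": 0.75}

print(smallest_epsilon(out_d1, out_d2))  # ln(3), about 1.0986
```

Taking the absolute value of the log ratio covers both orderings of the two data sets, which is why the result can never be negative.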

Connection of Differential Privacy with Information Theory

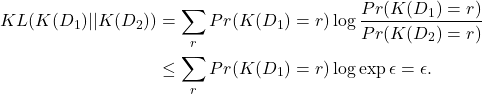

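A necessary condition for differential privacy is that the K-L divergence $\mathrm{KL}\big(f(D_1) \,\|\, f(D_2)\big)$ between the distributions of the two query outcomes is at most $\epsilon$ for any “adjacent” $D_1$ and $D_2$. Since $\Pr\big(f(D_1) \in \{r\}\big) \le e^{\epsilon} \Pr\big(f(D_2) \in \{r\}\big)$ for any singleton set $\{r\}$, we have (for a discrete query outcome, with the divergence measured in nats)

$$\mathrm{KL}\big(f(D_1) \,\|\, f(D_2)\big) = \sum_{r} \Pr\big(f(D_1) = r\big) \ln \frac{\Pr\big(f(D_1) = r\big)}{\Pr\big(f(D_2) = r\big)} \le \sum_{r} \Pr\big(f(D_1) = r\big)\, \epsilon = \epsilon. \tag{2}$$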

Note that the K-L divergence $\mathrm{KL}\big(f(D_1) \,\|\, f(D_2)\big)$ essentially measures the difference between the distributions of $f(D_1)$ and $f(D_2)$. Thus, the smaller $\epsilon$ is, the harder it is to distinguish between $D_1$ and $D_2$ from the query $f$, and so the more difficult it is to identify the individual record by which $D_1$ and $D_2$ differ.
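The relation between the K-L divergence and $\epsilon$ can be checked numerically on a small example. This is a sketch only: the two distributions are made-up stand-ins for the query outcome on two adjacent data sets, the helper names are hypothetical, and the divergence is computed in nats (natural logarithm) so that it is directly comparable with $\epsilon$.

```python
import math


def kl_divergence(p1, p2):
    """K-L divergence KL(p1 || p2) in nats for distributions on the same outcomes."""
    return sum(p * math.log(p / p2[r]) for r, p in p1.items() if p > 0)


def max_abs_log_ratio(p1, p2):
    """Smallest epsilon for which the ratio bound holds in both directions."""
    return max(abs(math.log(p1[r] / p2[r])) for r in p1)


# Made-up output distributions of a randomized query on two adjacent data sets.
out_d1 = {0: 0.5, 1: 0.3, 2: 0.2}
out_d2 = {0: 0.4, 1: 0.3, 2: 0.3}

eps = max_abs_log_ratio(out_d1, out_d2)
print(kl_divergence(out_d1, out_d2) <= eps)  # True
print(kl_divergence(out_d2, out_d1) <= eps)  # True
```

The bound holds in both directions because the log ratio of every outcome is at most `eps` in absolute value, and the divergence is an average of those log ratios.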

What next?

In the next post, we will talk about two mechanisms for achieving differential privacy: the Laplace mechanism and the exponential mechanism.