This is the third post of my data privacy series. Please refer to the first post for the definition of differential privacy and the second post for privacy mechanism.

Robustness of differential privacy versus processing

If a mechanism ![]() is

is ![]() -differential private to begin with, then further operation on

-differential private to begin with, then further operation on ![]() is still

is still ![]() -differential private.

-differential private.

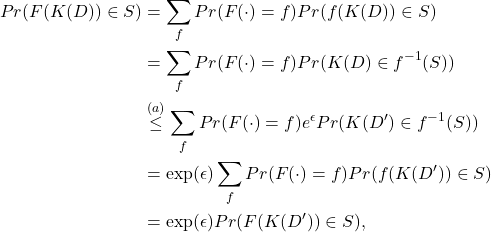

Proof:

Let’s assume the operation ![]() applying on the output of

applying on the output of ![]() is stochastic as deterministic operation is just a special case. Then, we have

is stochastic as deterministic operation is just a special case. Then, we have

(1)

where ![]() and

and ![]() are “adjacent” data sets and

are “adjacent” data sets and ![]() is due to the fact that

is due to the fact that ![]() is

is ![]() -differential private. Therefore,

-differential private. Therefore, ![]() is

is ![]() -differential private.

-differential private.

Actually, the above property should be apparent from the beginning. Because if this is not satisfied, the definition of privacy is meaningless since the “private” information could disclose unauthorized information simply through some malicious processing.

On the other hand, if multiple mechanisms are sequentially explored, we expect that the privacy of the overall mechanism (as a sequential combination of the multiple access) should reduce. We have this very simple lemma as follows.

Lemma (Sequential Composition):

If we have two mechanisms ![]() and

and ![]() are

are ![]() – and

– and ![]() -differential private, respectively, the overall mechanism is

-differential private, respectively, the overall mechanism is ![]() -differential private provided that the two mechanisms are independent.

-differential private provided that the two mechanisms are independent.

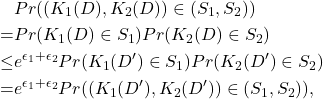

Proof:

(2)

where ![]() and

and ![]() are “adjacent” data sets.

are “adjacent” data sets.

One can of course generalize the above lemma to combination of many mechanisms by applying it repeatedly.

We can also compose multiple mechanisms in parallel where each member mechanism can only access a disjoint portion of the data set. Then, we have the following lemma.

Lemma (Parallel Composition):

If we have two mechanisms ![]() and

and ![]() applying on disjoint subsets of a data set and

applying on disjoint subsets of a data set and ![]() and

and ![]() are

are ![]() -differential private, then the overall mechanism is

-differential private, then the overall mechanism is ![]() -differential private provided that the member mechanisms are independent.

-differential private provided that the member mechanisms are independent.

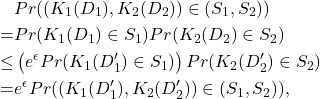

Proof:

Let ![]() and

and ![]() are “adjacent” data sets. Since they are adjacent, we cannot have both

are “adjacent” data sets. Since they are adjacent, we cannot have both ![]() and

and ![]() . Without loss of generality, assume

. Without loss of generality, assume ![]() and

and ![]() . Then,

. Then,

(3)

Again, the parallel composition lemma can obviously be generalized to the case with more than two member mechanisms.

Example 1 (Disclosing gender population sizes of a group):

Consider that we have a data set containing only the gender attribute and we disclose the population sizes of each gender. To ensure the data set is differentially private, one can add laplacian noise to each population size before disclosing. Let ![]() and

and ![]() denote the mechanism of outputing the population for the males and females, respectively. If each mechanism is

denote the mechanism of outputing the population for the males and females, respectively. If each mechanism is ![]() -differential private, the combined mechanism is also

-differential private, the combined mechanism is also ![]() -differential private. Note that we have parallel composition here since the gender populations are disjoint.

-differential private. Note that we have parallel composition here since the gender populations are disjoint.

Example 2 (Disclosing disease population sizes of a group):

Consider a similar setting as the previous example but we try to disclose the population sizes of a number of diseases, e.g., AIDS, SARS, lung cancer, etc. Again, we add laplacian noise to each population size before disclosing such that each disclosing mechanism is ![]() -differentially private. Let say we consider

-differentially private. Let say we consider ![]() different diseases here and we will disclose the population size for each of them. The overall mechanism in this case will be

different diseases here and we will disclose the population size for each of them. The overall mechanism in this case will be ![]() -differentially private instead of

-differentially private instead of ![]() -differentially private. Note that we have sequential composition here rather than parallel composition since the diseases populations are generally not disjoint (e.g., one can have both AIDS and lung cancer).

-differentially private. Note that we have sequential composition here rather than parallel composition since the diseases populations are generally not disjoint (e.g., one can have both AIDS and lung cancer).